- The second version of our EVREAL paper, which includes updated results, is now available on arXiv.

- Code for robustness analysis, downstream tasks, and color reconstruction is now published. Please see the GitHub repository for instructions and example commands.

- The evaluation code is now published. Please see the GitHub repository for instructions on installation, dataset preparation, and usage. (Code for robustness analysis and downstream tasks will be published soon.)

- Our result analysis tool now also includes results for HyperE2VID, a new state-of-the-art model that generates higher-quality videos than previous state-of-the-art methods while also reducing memory consumption and inference time. Please see the HyperE2VID webpage for more details.

- The web application of our result analysis tool is ready now. Try it here to interactively visualize and compare qualitative and quantitative results of event-based video reconstruction methods.

- We will present our work at the CVPR Workshop on Event-Based Vision in person, on the 19th of June 2023, during Session 2 (starting at 10:30 local time). Please see the workshop website for details.

EVREAL: Towards a Comprehensive Benchmark and Analysis Suite for Event-based Video Reconstruction

1 Hacettepe University, Computer Engineering Department

2 HAVELSAN Inc.

3 Koç University, Computer Engineering Department

4 Koç University, KUIS AI Center

News

Results

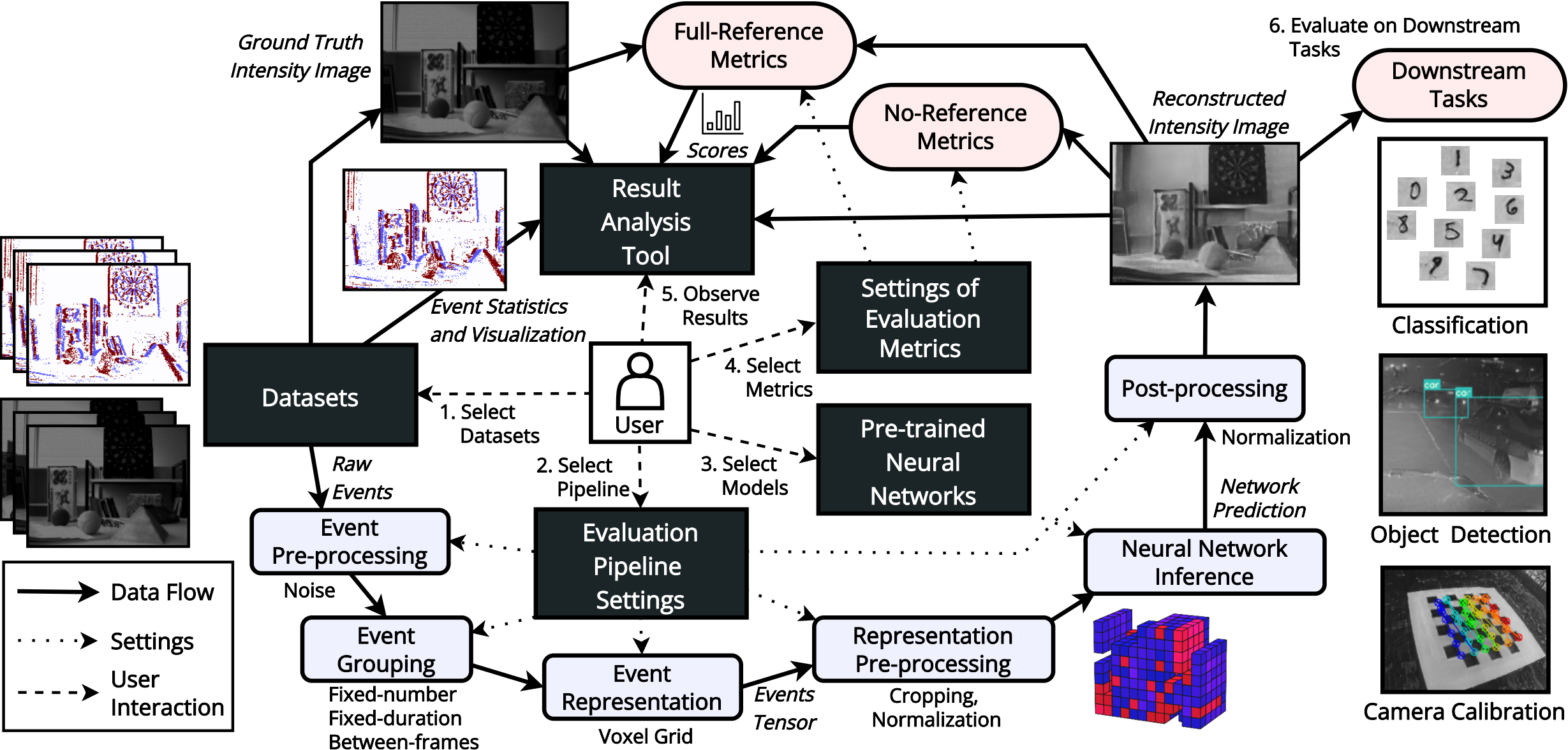

Web app of result analysis tool to interactively visualize and compare results of event-based video reconstruction methods:

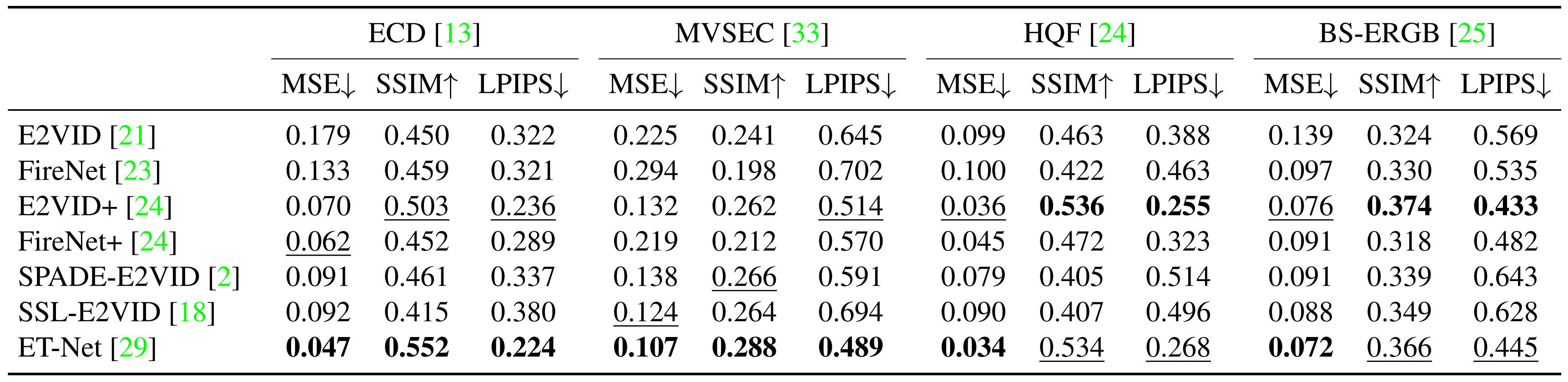

Full-reference quantitative results on the ECD, MVSEC, HQF, and BS-ERGB datasets:

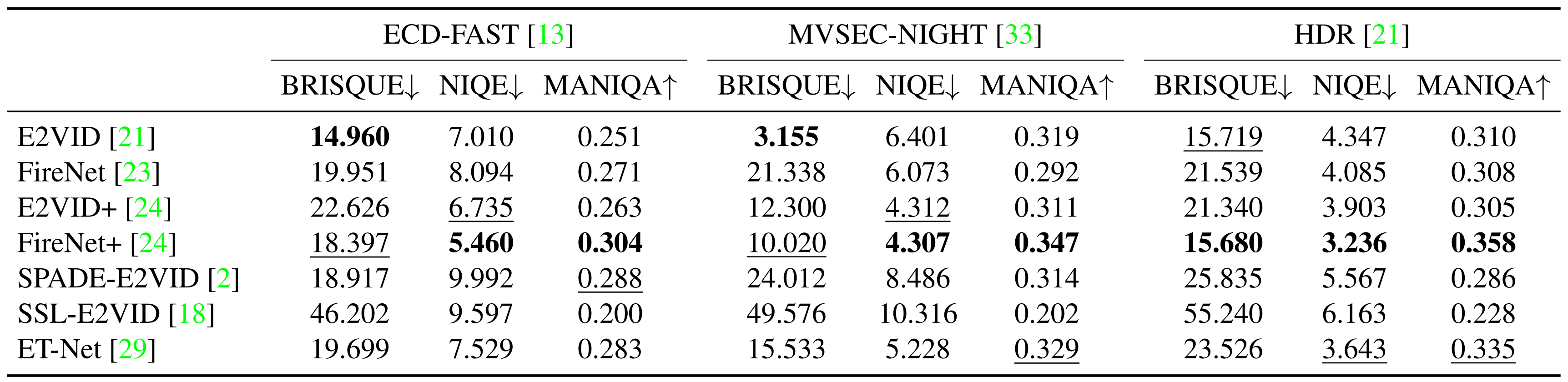

No-reference quantitative results on challenging sequences involving fast motion, low light, and high-dynamic range:

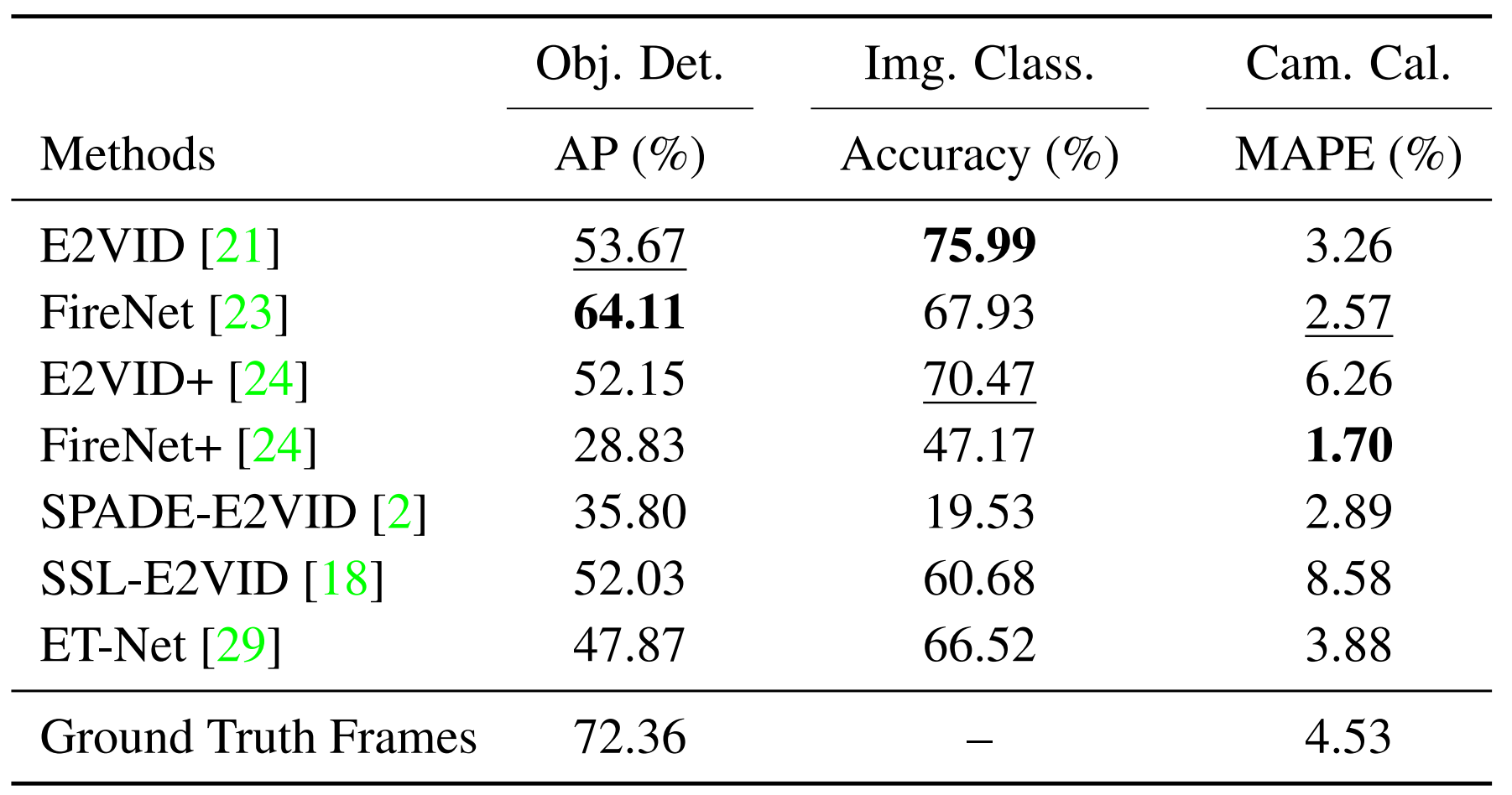

Quantitative results on downstream tasks:

BibTeX

@inproceedings{ercan2023evreal,
  title={{EVREAL}: Towards a Comprehensive Benchmark and Analysis Suite for Event-based Video Reconstruction},
  author={Ercan, Burak and Eker, Onur and Erdem, Aykut and Erdem, Erkut},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops},
  month={June},
  year={2023},
  pages={3942-3951}
}

Acknowledgements

This work was supported in part by the KUIS AI Center Research Award, TUBITAK-1001 Program Award No. 121E454, and the BAGEP 2021 Award of the Science Academy to A. Erdem.